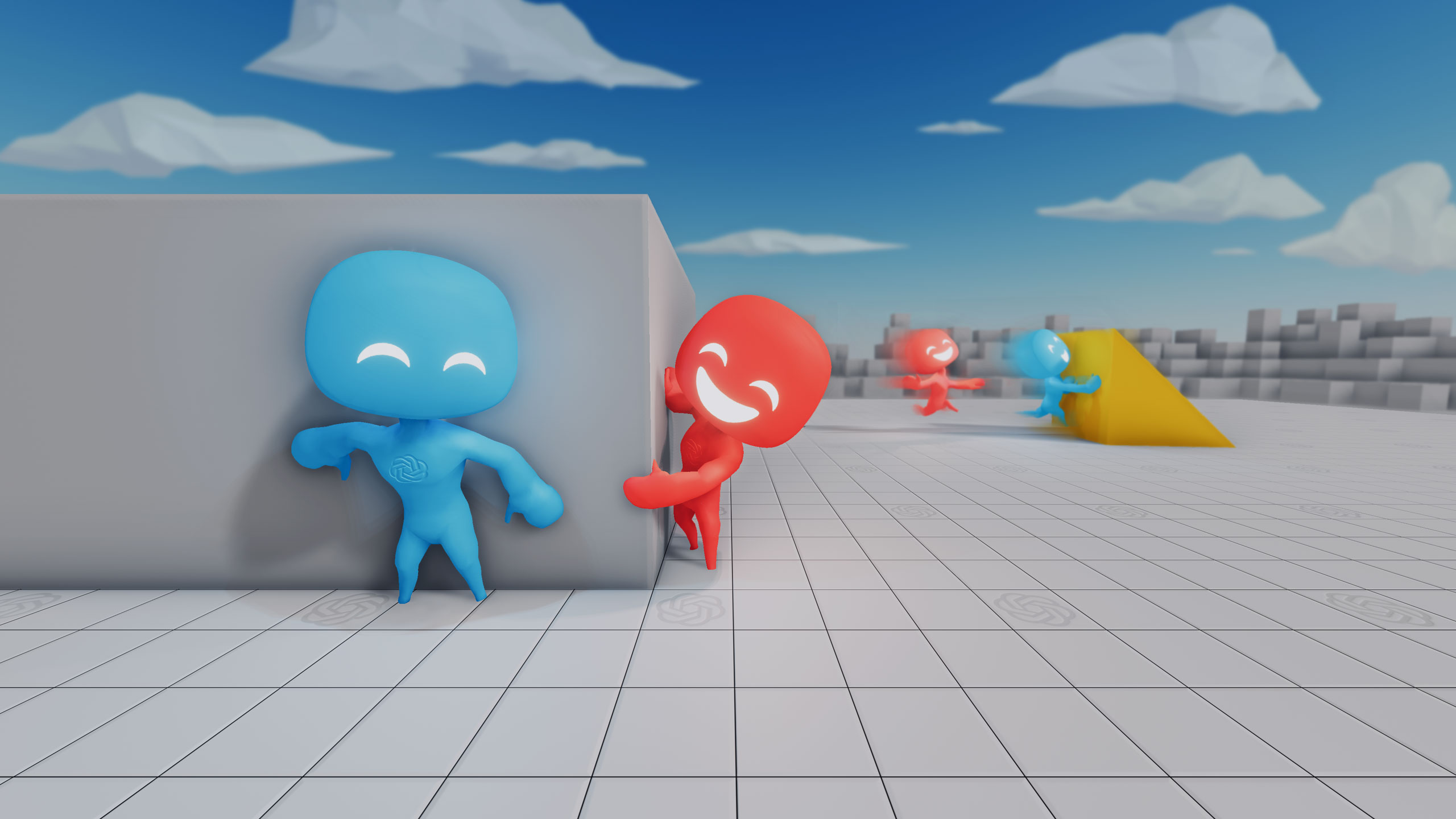

In one of its more recent releases, "Emergent Tool Use from Multi-Agent Interaction", OpenAI’s team observed agents discovering progressively more complex tool use while playing a simple game of hide-and-seek.

Through training in a new simulated hide-and-seek environment, the agents built a series of six distinct strategies and counterstrategies, some of which OpenAI’s team did not know the environment supported.

The self-supervised emergent complexity in this simple environment further suggests that multi-agent co-adaptation may one day produce extremely complex and intelligent behavior.

The experiment is not an isolated result: OpenAI presents it as further evidence that human-relevant strategies and skills, far more complex than the seed game dynamics and environment, can emerge from multi-agent competition and standard reinforcement learning algorithms at scale.

These results inspire confidence that in a more open-ended and diverse environment, multi-agent dynamics could lead to extremely complex and human-relevant behavior.